Models

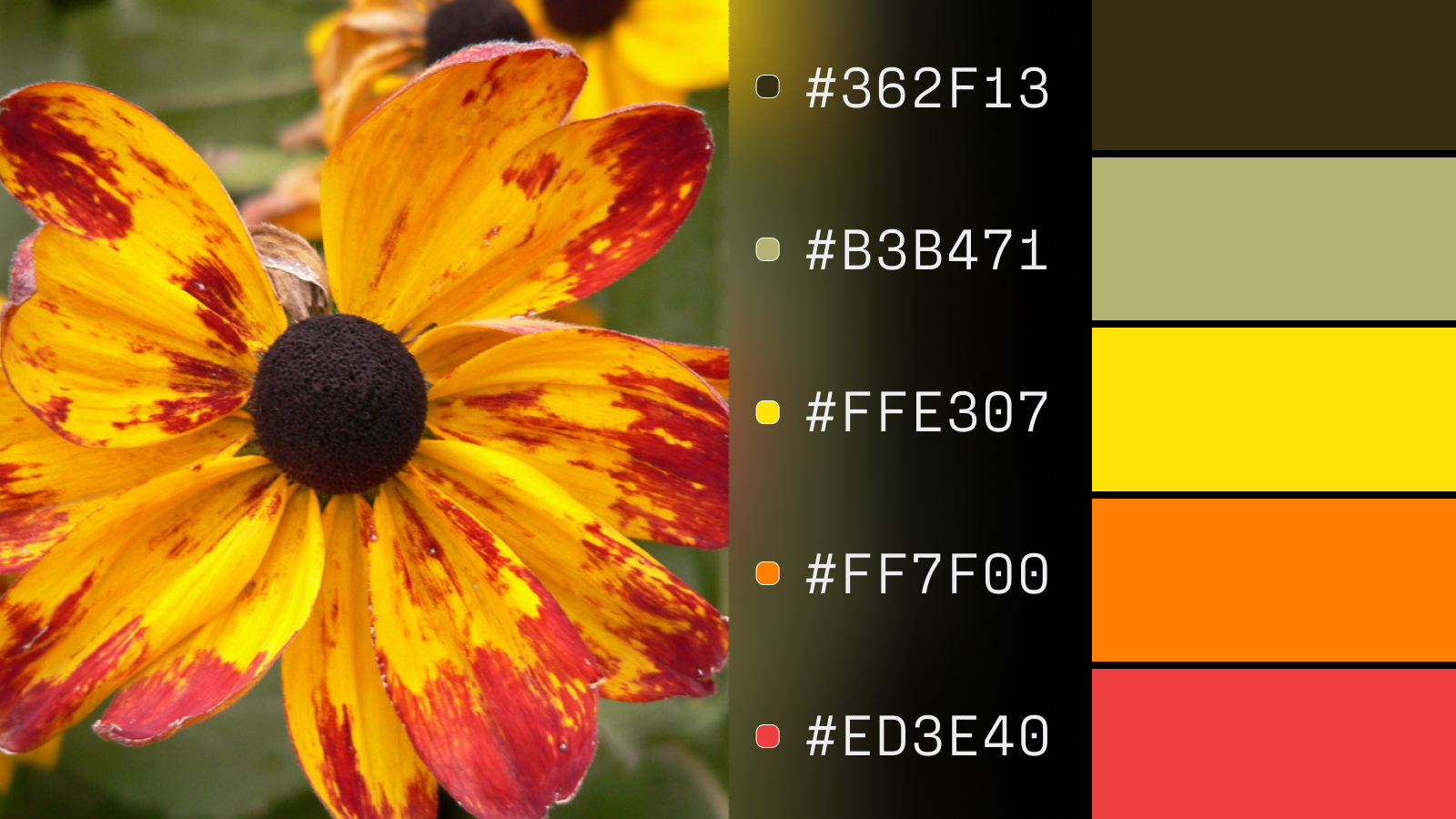

Color Extraction is a task in computer vision that involves the extraction and analysis of colors from images or videos. The objective of this task is to identify and isolate specific colors or color ranges present in the visual data.

Background Removal is an image processing technique used to separate the main object from the background of a photo. Removing the background helps highlight the product, subject, or character, bringing a professional and aesthetically pleasing look to the image.

The goal of Image to Anime was to create a new version of the image that would possess the same clean lines and evoke the characteristic feel found in anime productions, capturing the unique artistry and aesthetics associated with this style.

ZeroShot Image Classification CLIP is a task in the field of machine learning and image processing, aiming to predict the class or label of an image that has not been previously classified, in a dataset that the model has not been trained on with those classes.

Anime backgrounds, also known as anime backgrounds art or anime scenery, refer to the visual elements that form the backdrop of animated scenes in anime. These backgrounds are carefully designed and illustrated to provide the setting, atmosphere, and context for the characters and events within the anime.

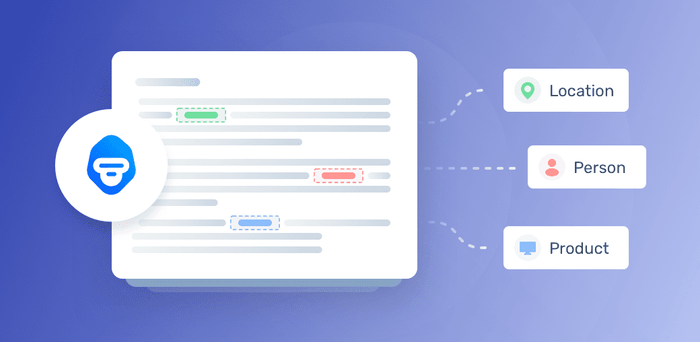

Named Entity Recognition with BERT utilizes cutting-edge technology to accurately identify and categorize named entities in textual data. By leveraging BERT's advanced capabilities, this tool streamlines information extraction processes by recognizing entities like names of individuals, organizations, and locations within text, enhancing text analysis efficiency.

Latest

Moodeng-Model-Pro-3.12 is our latest advanced AI solution designed to deliver robust performance and enhanced scalability across diverse applications. Built with cutting-edge deep learning and adaptive algorithms, this model offers improved accuracy in predictive analytics, natural language processing, and computer vision tasks. It seamlessly integrates with enterprise systems, enabling organizations to transform vast amounts of data into actionable insights. With optimized performance and reliability, Moodeng-Model-Pro-3.12 empowers businesses to streamline operations, boost efficiency, and drive innovation in the digital era.

META LLAMA 3 COMMUNITY LICENSE AGREEMENT Meta Llama 3 Version Release Date: April 18, 2024 "Agreement" means the terms and conditions for use, reproduction, distribution and modification of the Llama Materials set forth herein. "Documentation" means the specifications, manuals and documentation accompanying Meta Llama 3 distributed by Meta at https://llama.meta.com/get-started/. "Licensee" or "you" means you, or your employer or any other person or entity (if you are entering into this Agreement on such person or entity’s behalf), of the age required under applicable laws, rules or regulations to provide legal consent and that has legal authority to bind your employer or such other person or entity if you are entering in this Agreement on their behalf. "Meta Llama 3" means the foundational large language models and software and algorithms, including machine-learning model code, trained model weights, inference-enabling code, training-enabling code, fine-tuning enabling code

by @AIwithCat

Introducing the Capybara Super Model—the epitome of effortless charm and natural elegance. With a luxurious coat that gleams under the spotlight and eyes that captivate with every glance, this capybara redefines what it means to be a trendsetter in the wild. Balancing serene confidence with playful charisma, it strides the runway like a born icon, merging the laid-back grace of nature with the high-fashion flair of the modern era. Not just a pretty face, our super model is a symbol of sustainability and authenticity—an ambassador for wildlife and environmental harmony. Whether gracing the covers of exclusive magazines or starring in cutting-edge eco-friendly campaigns, the Capybara Super Model inspires us to celebrate beauty in its most genuine form. Step into a world where nature meets haute couture, and witness the extraordinary presence of the capybara that’s taking the world by storm.

Background Replacements task is a task in the field of image processing and computer vision, focusing on changing the background of an image. This task aims to remove the current background and replace it with a new background, creating a new image with a different environment or context. Changing the background can bring many benefits and applications in various fields. In photography and graphic design, Background Replacements allow the creation of creative images with diverse backgrounds, ranging from natural landscapes, cityscapes, and seascapes to special effects and unique styles. This helps create attention-grabbing images, make a strong impression, and convey messages more effectively. In advertising and marketing. Background Replacements are also used to create professional product images that attract customers. Combining Background Replacements with the MODNet model simplifies the process of changing the background and saves time compared to manual interventions.

ZeroShot Image Classification CLIP is a task in the field of machine learning and image processing, aiming to predict the class or label of an image that has not been previously classified, in a dataset that the model has not been trained on with those classes.

by @AIOZNetwork

Prompt Extend is an innovative approach that aims to enhance the capabilities of language models and improve their response generation. It involves extending the initial prompt or query by providing additional context or specifications to guide the model's understanding and generate more accurate and relevant responses.

by @AIOZNetwork

The goal of Image to Anime was to create a new version of the image that would possess the same clean lines and evoke the characteristic feel found in anime productions, capturing the unique artistry and aesthetics associated with this style.

by @AIOZNetwork

Dense Prediction for Vision Transformers is a task focused on applying Vision Transformers (ViTs) to dense prediction problems, such as object detection, semantic segmentation, and depth estimation. Unlike traditional image classification tasks, dense prediction involves making predictions for each pixel or region in an image.

by @AIOZNetwork

Chest X-rays classification with ViT is not a specific term or model that I am aware of in the context of pneumonia classification using X-ray images. However, I can provide you with a general overview of pneumonia classification using X-ray images and the role of vision transformers (ViTs) in image analysis tasks.

by @AIOZNetwork

The Metal Band Logos Classification task involves the classification or recognition of logos associated with metal music bands. It employs machine learning and computer vision techniques to analyze and categorize the visual characteristics of metal band logos.

by @AIOZNetwork

Musical instrument classification is the task of automatically recognizing and categorizing different musical instruments from audio recordings or spectrograms. It involves identifying the unique characteristics and sound patterns associated with each instrument to determine its class or type.

by @AIOZNetwork

Face detection is a computer vision technique that involves identifying and locating human faces within an image or video. The goal of face detection is to detect the presence of faces and draw bounding boxes around them, without necessarily identifying specific facial features or landmarks.

by @AIOZNetwork

Face mesh detection, also known as facial landmark detection or face pose estimation, is the task of identifying and localizing specific keypoints or landmarks on a human face. It involves detecting the positions of facial features, such as eyes, eyebrows, nose, mouth, and jawline, in an image or video.

by @AIOZNetwork

Weather identification refers to the process of recognizing and categorizing the current weather conditions based on various atmospheric parameters and observable phenomena. It involves analyzing weather patterns, atmospheric data, and visual cues to determine the prevailing weather conditions at a particular location and time.

by @AIOZNetwork

Sentiment classification is the automated process of identifying and classifying emotions in text as positive sentiment, negative sentiment, or neutral sentiment based on the opinions expressed within.

by @AIOZNetwork